To build a robust deep learning model, it can be much more than training or fine tuning some existing models (e.g. inception v3, resnet, LSTM, etc.) with your own dataset. These winning models are your best friend and can usually serve as base models.

Some Deep Learnings from Applying Deep Learning

Wei Guan, Machine Learning & AI Research Lead | The Climate Corporation

Reprinted with permission from The Climate Corporation's Climate FieldView blog:

More and more companies are building and applying deep learning models in their business. Several practical issues should be taken into consideration before these models are put into production. Consider this scenario: you may build a model that works perfectly with training and validation data, but it doesn’t perform well after deploying the model in real scenarios. Or, you may struggle with getting better performance compared to traditional machine learning models. While the latter case will make you rethink whether to invest more resourcing on this, the former situation is more risky and you may not realize it until you put your models into production.

Building Robust Deep Learning Models

To build a robust deep learning model, it can be much more than training or fine tuning some existing models (e.g. inception v3, resnet, LSTM, etc.) with your own dataset. These winning models are your best friend and can usually serve as base models. However, even for the most well-studied problems such as image classification or object detection, fine tuning these models could just be the first step. There are further important issues that should be considered.

The first and most important thing you should think about is whether the distribution of data you have in model development will be the same as the distribution of data in a real scenario, regardless how much data you have. I remember in one of Andrew Ng’s talks I attended about a year ago, he emphasized that your test data distribution should match your training data in order for your deep learning models to work well (or work as expected). While this makes sense, it may not be the case in the real world. It’s possible that you do not even know what distribution of the real data will be, and the distribution could also be changing as well.

Deep Learning for Real-time Crop Disease Identification

Take our crop disease identification research for example. We are diagnosing disease in real-time from pictures taken in the field. There are two main sources that could cause the distribution differences. The first one is how people take pictures. The way your target users take pictures may be different from the way those training pictures are taken. We know many convolutional models are not good at handling scale and rotation variances. If the variances are large, it will be a problem that could not be resolved by data augmentation, or by utilizing a transformation-based network such as spatial transformer network or deformable convolutional layers. One simple and efficient way to tackle this could be increasing the diversity of collected data and restricting the way user taking pictures.

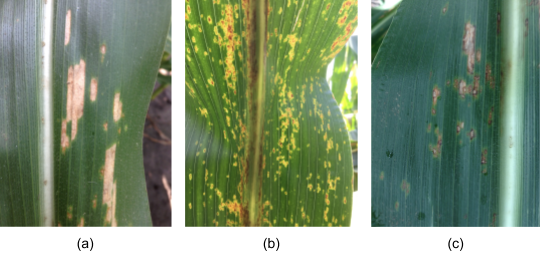

Figure 1: (a), (b) and (c) are 3 different types of corn hybrid. Grey Leaf Spot (GLS) symptoms (at similar disease stage) look very different on these hybrids. The training data usually have a limited number of hybrids.

The second, more challenging source of distribution difference is only having access to data from a subset of categories (which cannot represent the whole population). In the disease identification example, when we collect corn disease images, the images are only from certain types of corn hybrids (type of seed). The disease symptoms may look very different from hybrid to hybrid (as in Figure 1). Can we generalize our model to most or even all hybrids?

The answer is yes, but probably with some domain knowledge. The data distributions are different in raw image space, but this does not mean they have to be different in other spaces. If we can find some latent space where our training data can be a good representation of the general population, we can just build models in that latent space. Another way to tackle this is to make the learned features more general instead of specific to several hybrids or specific to your dataset. This can be done by enforcing certain regularizations or partially engineering the features rather than letting the model learn the features all by itself.

Figure 2: two examples of learned features. The learned features in (a) are more relevant while the features in (b) are less useful in a more general dataset.

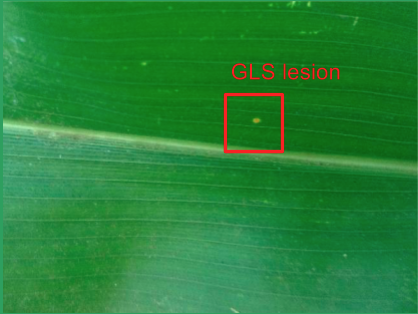

Another interesting issue can be the necessity of adding extra classes. For example, we like to have healthy-leaf class and non-leaf class in addition to different diseases. The non-leaf class can be trained with a diverse set of non-leaf pictures (you may be careful selecting these images). However, the healthy-leaf class is more tricky than you think. The reason is that it is not an exclusive class. Being more specific, diseased leaves may also contain tissues that are healthy (Figure 3). The way softmax classifier works is discriminative and exclusive, i.e., the one with the highest score will be selected as the final class. Whenever the healthy parts activate neurons more than the diseased parts do, the leaf will be classified as healthy.

Detection frameworks could be a good solution for the above issue. This leads to another question: detection vs. classification. A detection framework does allow you to identify and locate multiple objects in an image, but it’s also a slightly harder problem, so the accuracy is not as high. Moreover, many popular frameworks today like faster R-CNN, single shot detection (SSD), R-FCN, or YOLO, do require ground truth of bounding boxes, which might be costly to have. Besides, unlike objects in a dataset like ImageNet, COCO, many objects may not have a well-defined shape. It makes less sense to model the conditional probability of a bounding box given part of the object (a subwindow or proposal in an image). There are certainly ways to work around this but the work and effort may be more complex.

Some less critical, but also common, challenges include: what if the diversity is too large within a class? Does it benefit to divide further into subclasses so the features are more consistent? What if some classes have considerably fewer training samples? What’s the best way to manage cases when certain misclassification is less tolerable than others? Those are just a few of many practical issues to consider.

The popularity of generative adversarial networks (GANs) and improved variants like Wasserstain-GAN provide us a new way to model data distribution, synthesize new data and leverage large amounts of unlabeled data. Though semi-supervised learning does require further research, it could be helpful to try out these models to see if the unlabeled data does boost the performance of your model. In addition, the recent proposal of Capsule Network by Geoffrey Hinton has gained much attention. It is claimed that less training data is required to train the network by explicitly modeling the relationship of detected features. While it is an interesting new approach, the CapsNet is still in exploratory stage and needs to be further tested on more complex types of images.

.png)

Figure 4: examples of generated images by GAN. It enriches the dataset in a different way than traditional data augmentation such as random scaling, cropping, etc.

While convolutional models are successful in extracting useful features across different vision problems, this is not the case for sequential data. Sequential data is more problem dependent and each type of data may have its own characteristics. LSTM achieving good results in language processing does not necessarily mean it will be successful for other types of sequence data, and it may not be helpful to add more hidden nodes or stack multiple LSTM layers. Getting the right architecture may be your first critical and time consuming step.

There is a lot of buzz about AI and people are interested in trying out deep learning models for different problems. However, to solve a practical problem, always try your best using traditional machine learning methods (unless they are classic DL problems). In many cases it can give you better insight on whether a deep neural network is a promising direction to go with, and it can serve as a baseline model. If your traditional model is equally good, and the features are more interpretable, you do not want to deploy a black-box (or grey-box) model just for marketing purposes. Or if you do, you may need to take time to apply some deep learnings to your deep learning models.

About Wei Guan, Machine Learning & AI Research Lead

About Wei Guan, Machine Learning & AI Research Lead

About the author: Wei Guan is leading machine learning and AI research as part of the Geospatial Science Team at The Climate Corporation. He is passionate in working on challenging data science problems across a variety of domains. Wei received his PhD in computer science from University of Southern California, and has many years of practical experience in building scalable and robust statistical, machine learning and deep learning models.

The content & opinions in this article are the author’s and do not necessarily represent the views of AgriTechTomorrow

Comments (0)

This post does not have any comments. Be the first to leave a comment below.

Featured Product